Context is Everything: You Only Get What You Paid For

What determines the quality of an LLM’s output?

The obvious answer: the input. Garbage in, garbage out. This is true, but incomplete - because most people have a narrow view of what “input” means. They think of the prompt. The question they type. Maybe the system instructions if they’re sophisticated.

But the real input is everything in the context window: your prompt, loaded instructions, retrieved documents, conversation history, tool outputs. Your 50-token prompt sits in a window of 100,000 tokens - and all of it shapes the output.

This is why “prompt engineering” only gets you so far. The real skill is context engineering.

Enter Context Engineering

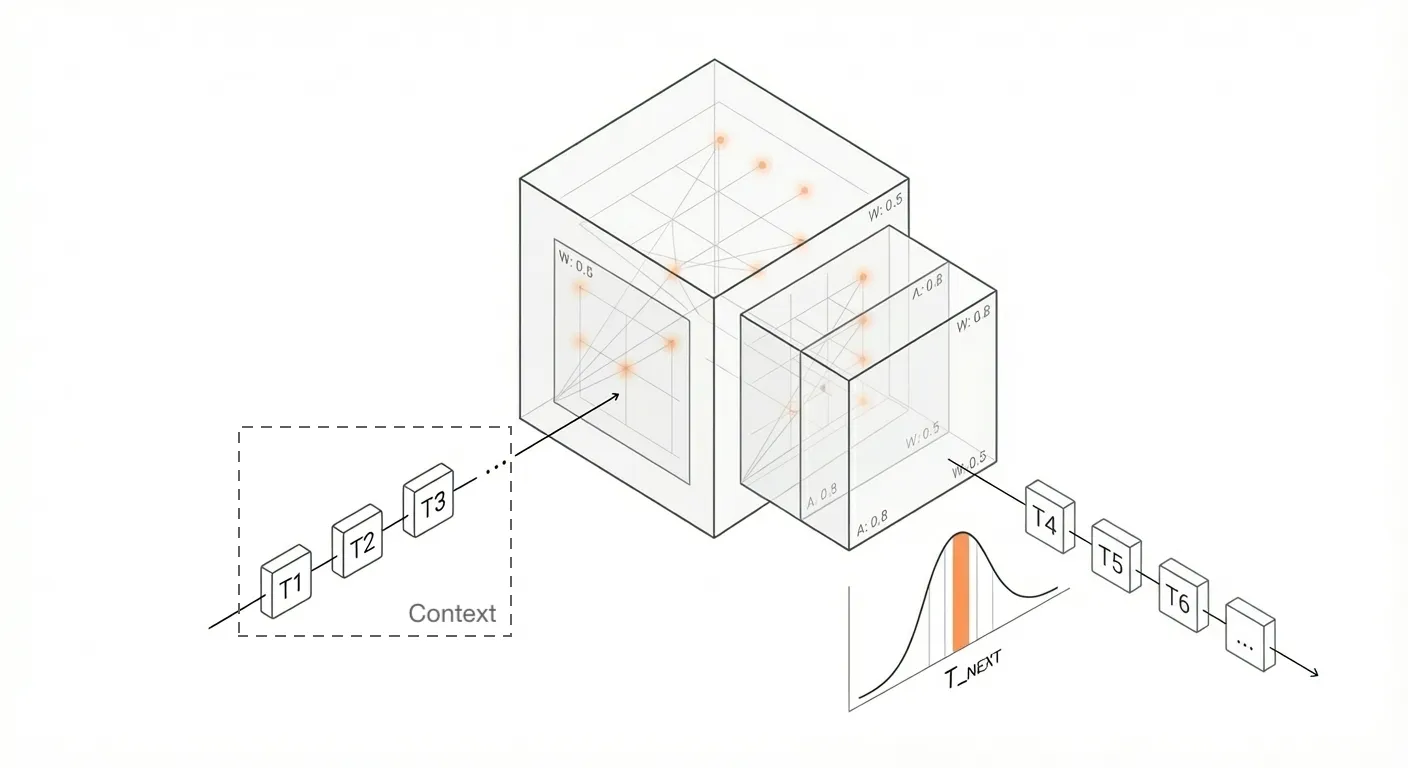

An LLM is a function. It takes input tokens, runs them through billions of parameters, and produces output tokens based on probability distributions. That’s it. No magic. No understanding in the human sense. Just an extremely sophisticated next-token predictor.

Two factors determine output quality:

- Model capability - the parameters, training data, architecture. You can’t change this.

- Context - the tokens being fed into the model. You control this entirely.

The context is the only thing the model can see. It has no memory. It has no persistent state. It cannot “remember” your previous conversations unless those conversations are placed in the current context. Every inference is a fresh start from whatever tokens are in the context.
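To make the "fresh start" point concrete, here is a minimal sketch. `fake_model` is a made-up stand-in for an LLM call, not any real API; all it can do is scan the messages it is handed. The same question gets a different answer depending on whether the earlier turn is placed back into the context.

```python
from typing import Dict, List

Message = Dict[str, str]


def fake_model(context: List[Message]) -> str:
    """Hypothetical stand-in for an LLM call: it can only use what is in `context`."""
    for message in context:
        if message["role"] == "user" and "my name is" in message["content"].lower():
            name = message["content"].rstrip(".").split()[-1]
            return f"Your name is {name}."
    return "I don't know your name."


# Turn 1: the user introduces themselves, and the reply is stored by the caller.
history: List[Message] = [{"role": "user", "content": "My name is Ada."}]
history.append({"role": "assistant", "content": fake_model(history)})

question: Message = {"role": "user", "content": "What is my name?"}

# A fresh call with only the new question: the earlier turn is invisible.
without_history = fake_model([question])

# The same call with the prior turns placed back into the context.
with_history = fake_model(history + [question])

print(without_history)
print(with_history)
```

The "memory" lives entirely in the `history` list that the caller maintains and re-sends. The model function itself holds no state between calls.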

The model doesn’t know who you are. It doesn’t know what project you’re working on. It doesn’t know your codebase, your conventions, your preferences. Unless you tell it. In this context. Right now.

The context has a size limit: only a certain number of tokens can fit into it. Modern commercial models usually have a context window ranging from 128K to 200K tokens, a scale somewhat similar to the memory size of early PCs.

Context real estate is scarce, so it's important to use it wisely.
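One way to picture spending a limited window is a simple budget check. The sketch below is an illustration under loud assumptions: `estimate_tokens` counts whitespace-separated words as a crude proxy for a real tokenizer, and `fit_to_budget` is a hypothetical helper that keeps the system and user prompts and then adds the most recent history items that still fit.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def fit_to_budget(
    system_prompt: str, history: List[str], user_prompt: str, budget: int
) -> List[str]:
    """Keep both prompts, then add the newest history items that fit the budget."""
    used = estimate_tokens(system_prompt) + estimate_tokens(user_prompt)
    if used > budget:
        raise ValueError("system and user prompt alone exceed the budget")

    kept: List[str] = []
    for item in reversed(history):  # walk from newest to oldest
        cost = estimate_tokens(item)
        if used + cost > budget:
            break  # everything older is dropped as well
        kept.append(item)
        used += cost

    kept.reverse()  # restore chronological order
    return [system_prompt, *kept, user_prompt]


context = fit_to_budget(
    system_prompt="You are a code reviewer.",
    history=[
        "old message about setup steps",
        "recent note on the failing test",
        "latest stack trace excerpt",
    ],
    user_prompt="Why does the test fail?",
    budget=20,
)
print(context)
```

Dropping the oldest items first is only one possible policy. Summarizing old turns or ranking items by relevance are alternatives that the same budget check could sit in front of.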

Context engineering is the discipline of systematically controlling what goes into the context.

What’s in the Context?

Everything in the context is tokens. Your prompt, system instructions, conversation history, tool outputs - all converted to tokens and fed into the model together. There’s no separate “memory system” or “knowledge base.” When an AI seems to “remember” something, that information was literally injected into the context before your message.

Here’s how modern AI tools like Claude Code or Cursor compose context:

| Component | What It Is | When It Loads |
|---|---|---|
| System Prompt | Role definition, boundaries, behavior | Always present |
| User Prompt | Your current task | Real-time |
| Skills/Rules | Conventions, SOPs, domain knowledge | On-demand |
| Memory | Past interactions, preferences | Retrieved |
| Tool Results | File contents, API responses, searches | After tool execution |

The key insight: not everything loads at once. Good AI tools use progressive loading - system prompts are always present, but skills load based on what you’re working on. Open a Python file, Python conventions load. Switch to TypeScript, different rules apply. This keeps context focused and relevant.
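Progressive loading can be sketched in a few lines. This is not how Claude Code or Cursor actually implement it; the `SKILLS` mapping, the rule texts, and `compose_context` are all hypothetical names chosen for illustration. The idea is only that the system prompt is always included, while a skill is added when the active file's extension matches.

```python
from pathlib import Path
from typing import Dict, List

SYSTEM_PROMPT = "You are a coding assistant."

# Hypothetical skill texts, keyed by file extension.
SKILLS: Dict[str, str] = {
    ".py": "Python conventions: follow PEP 8 and add type hints.",
    ".ts": "TypeScript conventions: enable strict mode and avoid `any`.",
}


def compose_context(active_file: str, user_prompt: str) -> List[str]:
    """Always include the system prompt; load a skill only if one matches."""
    context = [SYSTEM_PROMPT]
    skill = SKILLS.get(Path(active_file).suffix)
    if skill is not None:
        context.append(skill)
    context.append(user_prompt)
    return context


python_context = compose_context("app/main.py", "Refactor this function.")
markdown_context = compose_context("README.md", "Fix the typos.")
print(python_context)
print(markdown_context)
```

With a Python file open, the context carries three entries. With a Markdown file, no skill matches and only the system prompt and user prompt are spent against the window.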

The Agent Loop

Here’s what makes modern AI coding tools powerful: they don’t just answer once. They run in a loop.

The model thinks, acts (calls tools), observes the results, and iterates. Each turn of the loop updates the context with new information. The read_file tool isn’t just about reading files - it’s a context-loading mechanism. Same with search, same with API calls.
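A stripped-down version of that loop might look like the sketch below. Everything here is an assumption made for illustration: `scripted_model` is a hard-coded stand-in for the LLM, `FILES` is an in-memory fake file system, and the step format is invented. What it shows is the shape of the loop: each tool result is appended to the context, and the next model call sees it.

```python
from typing import Callable, Dict, List

# Hypothetical in-memory "file system" so the sketch runs without touching disk.
FILES: Dict[str, str] = {"config.py": "TIMEOUT_SECONDS = 30"}


def read_file(path: str) -> str:
    """Tool: return file contents, which will be loaded into the context."""
    return FILES.get(path, f"error: {path} not found")


TOOLS: Dict[str, Callable[[str], str]] = {"read_file": read_file}


def scripted_model(context: List[str]) -> Dict[str, str]:
    """Stand-in for an LLM: requests the file once, then answers from the context."""
    for entry in context:
        if entry.startswith("tool_result:"):
            value = entry.split("= ")[-1]
            return {"type": "answer", "text": f"The timeout is {value} seconds."}
    return {"type": "tool_call", "tool": "read_file", "argument": "config.py"}


def run_agent(task: str, max_turns: int = 5) -> List[str]:
    context = [f"user: {task}"]
    for _ in range(max_turns):
        step = scripted_model(context)  # think
        if step["type"] == "answer":
            context.append(f"assistant: {step['text']}")
            break
        result = TOOLS[step["tool"]](step["argument"])  # act
        context.append(f"tool_result: {result}")  # observe: context grows
    return context


transcript = run_agent("What is the timeout?")
for line in transcript:
    print(line)
```

The `max_turns` cap is a design choice rather than a requirement: it keeps a confused model from looping forever and from filling the window with tool output.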

This architecture has three key mechanisms:

- Static knowledge (Skills) - tells the AI how to do things: conventions, patterns, standards

- Dynamic tools (MCP) - lets the AI fetch information and take actions: read files, query databases, call APIs

- Loop mechanism (Agent) - lets the AI keep going until the task is complete: iterate, refine, verify

The magic isn’t in the model. It’s in the orchestration - the system that decides what goes into context, when, and how.

MCP: The Undervalued Context Loader

Everyone talks about MCP as a way to give LLMs “tools” - the ability to not just “think” but also “act”. Call APIs. Query databases. Execute code. True enough.

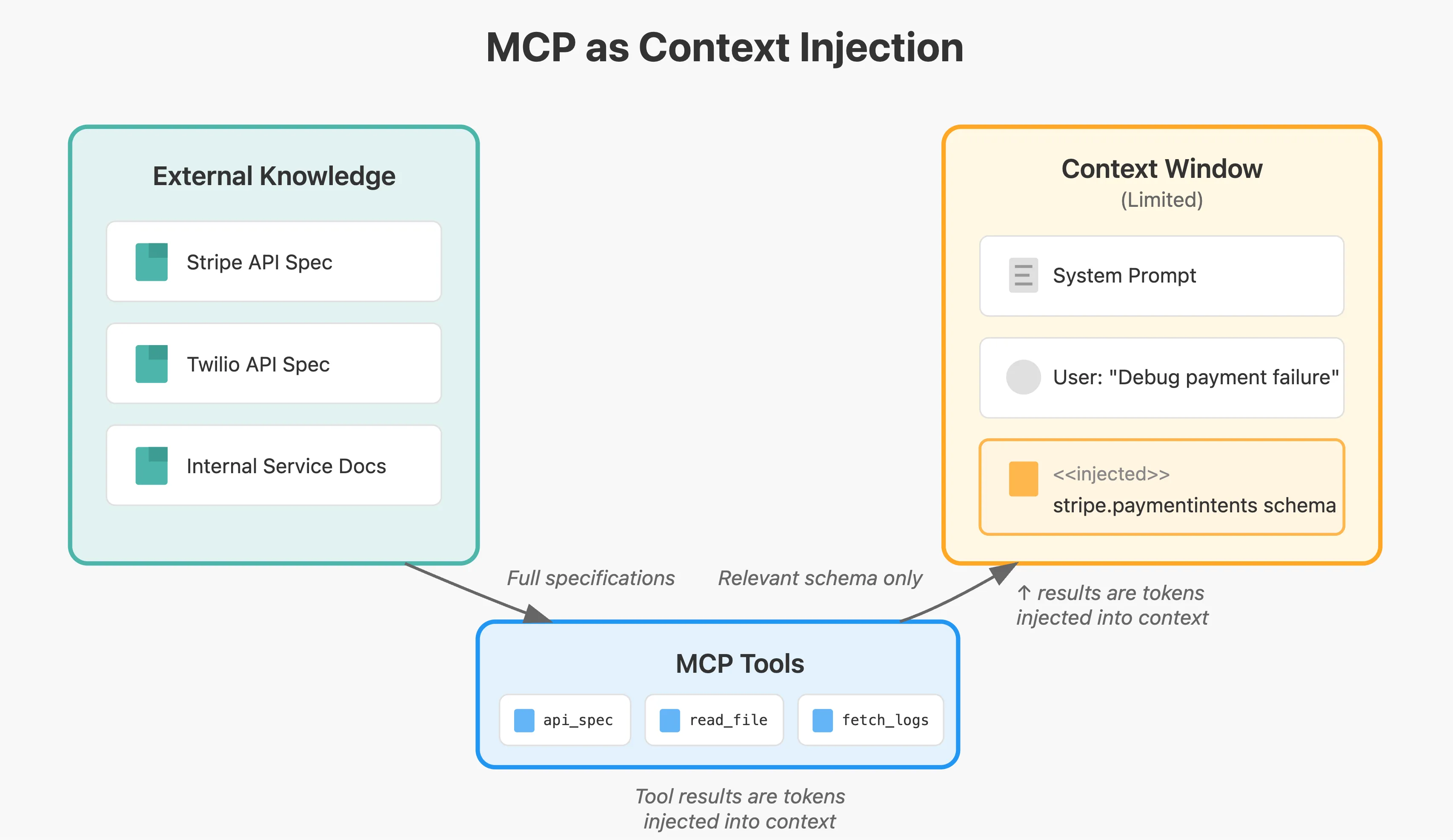

But the more important capability is underappreciated: dynamic context injection.

Consider this: you’re debugging a payment failure. The agent reads your code and sees a call to stripe.paymentIntents.create(). It needs to understand what parameters that endpoint accepts, what it returns, how it handles errors.

That information isn’t in your codebase. It’s in Stripe’s API documentation. The agent could hallucinate based on training data - maybe accurate, maybe stale. Or it could use an api_spec tool to fetch the current API schema for that endpoint.

Now the agent isn’t guessing. It’s reasoning from actual specifications, injected into context at the moment they became relevant.

Essentially, MCP can be an on-demand context loading mechanism. It’s not only a knife, a hammer, a tool. It is also a book, a consultant, a context loader.

The tool call itself is just a way to trigger the load. The real value is that the tool returns information the model couldn’t otherwise access - and that information becomes part of the context for subsequent reasoning.

The agent didn’t need Stripe’s entire API surface. It needed one endpoint’s schema at one moment. Tools make that precision possible. The context determines the output. The tools shape the context.
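Here is a rough sketch of a tool acting as a context loader. The `api_spec` function and `SPEC_STORE` are hypothetical, and the schema strings are deliberate placeholders rather than Stripe's real schema; a real tool would fetch the current spec from the provider. The point is the precision: only the one requested endpoint ends up in the context.

```python
from typing import Dict, List

# Hypothetical spec store. The values are placeholders, not Stripe's real schema.
SPEC_STORE: Dict[str, str] = {
    "paymentIntents.create": "<placeholder: schema text a real tool would fetch>",
    "customers.create": "<placeholder: schema for a different endpoint>",
}


def api_spec(endpoint: str) -> str:
    """Hypothetical tool: return the schema for exactly one endpoint."""
    return SPEC_STORE.get(endpoint, f"no spec found for {endpoint}")


def inject_spec(context: List[str], endpoint: str) -> List[str]:
    """Return a new context with the tool result appended."""
    result = api_spec(endpoint)
    return context + [f"tool_result(api_spec, {endpoint}): {result}"]


context = ["user: Why does the call to stripe.paymentIntents.create() fail?"]
context = inject_spec(context, "paymentIntents.create")

for entry in context:
    print(entry)
```

The rest of the store never touches the window. The model's next step reasons over two entries: the question and the one schema that became relevant.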

The Skill Shift

The industry talks about AI replacing programmers. That’s not quite right. What’s happening is a shift in what the core skill is.

For decades, the bottleneck was translation: turning requirements into code. Programmers who could hold complex systems in their heads and express them in syntax had rare, valuable abilities.

With AI, translation becomes cheap. The bottleneck moves to both ends of the model.

On the input end, it’s about context management: knowing what information the model needs, how to get it into context, how to structure it effectively, and how to iterate when the first result isn’t right. This requires understanding how systems work, not just what they do - thinking about information flow, visibility, and the constraints of the medium.

On the output end, it’s about quality review: vetting the AI’s work, assessing its quality, and providing feedback to make it better. This requires the same software engineering expertise it always did - acquired through reading computer science literature and pulling late nights fixing bugs, not skimming AI-generated summaries.

AI can write as many lines of code as it wants. Who decides what to keep and what to throw away? Humans.

The engineers who thrive with AI tools aren’t the ones with the best prompts. They’re the ones who understand the model and know how to operate it from both ends.

The Reframe

Here’s the mental model to carry forward:

Stop thinking about prompting. Start thinking about context.

Every interaction with an LLM is an exercise in information architecture. What does the model need to know? Where will that information come from? How will it get into the context window? What should be loaded upfront, what should be fetched on-demand, and what should the model go find itself?

The model is a fixed capability. A very impressive fixed capability, but fixed nonetheless. You can’t make it smarter. You can’t make it more knowledgeable than its training allows.

What you can do is ensure it has the right information when it needs it. That’s context engineering. That’s the lever you actually control.

Everything is tokens. The context window is the only reality the model knows. Your job is to make that reality sufficient for the task at hand.
